The internet is full of personal artifacts, many of which can linger online long after someone dies. But what if these relics are used to simulate dead loved ones? It's already happening, and AI ethicists warn that this reality is opening us up to a new kind of "digital haunting" by "deadbots."

People have tried to converse with deceased loved ones through religious rites, spiritual mediums, and even pseudoscientific technological approaches for millennia. But the ongoing interest in generative artificial intelligence presents an entirely new possibility for grieving friends and family: the potential to interact with chatbot avatars trained on a deceased individual's online presence and data, including their voice and visual likeness. While still advertised explicitly as digital approximations, some of the products offered by companies like Replika, HereAfter, and Persona can be (and in some cases already are) used to simulate the dead.

And while it may be difficult for some to process this new reality, or even take it seriously, it's important to remember that the "digital afterlife" industry isn't just a niche market limited to smaller startups. Just last year, Amazon showed off the potential for its Alexa assistant to mimic a deceased loved one's voice using only a short audio clip.


AI ethicists and science-fiction authors have explored and anticipated these potential situations for decades. But for researchers at Cambridge University's Leverhulme Center for the Future of Intelligence, this unregulated, uncharted "ethical minefield" is already here. And to drive the point home, they envisioned three fictional scenarios that could easily occur any day now.

In a new study published in Philosophy and Technology, AI ethicists Tomasz Hollanek and Katarzyna Nowaczyk-Basińska relied on a method called "design fiction." First coined by sci-fi author Bruce Sterling, design fiction refers to "a suspension of disbelief about change achieved by using diegetic prototypes." Basically, researchers write plausible events alongside fabricated visual aids.

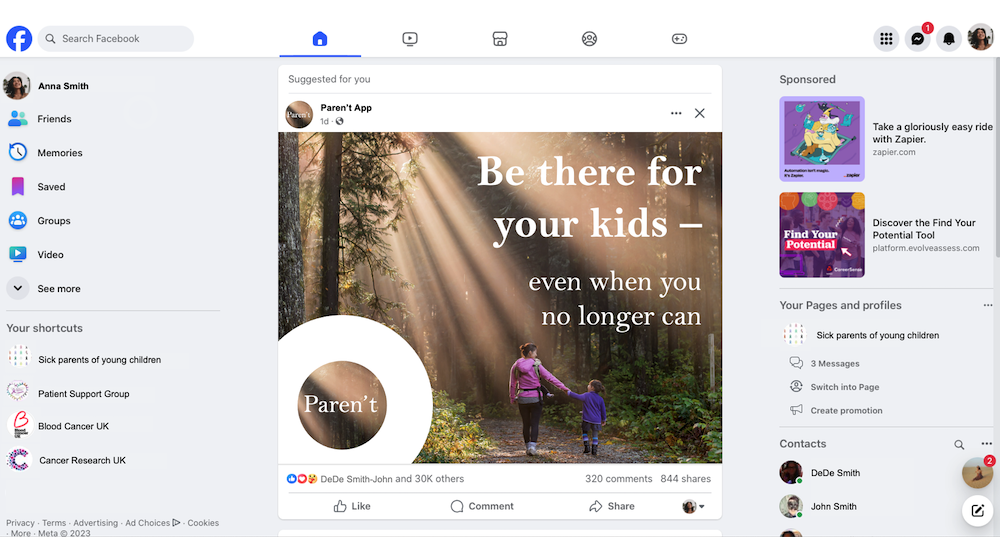

For their research, Hollanek and Nowaczyk-Basińska imagined three hyperreal scenarios of fictional individuals running into issues with various "postmortem presence" companies, and then made digital props like fake websites and phone screenshots. The researchers focused on three distinct groups: data donors, data recipients, and service interactants. "Data donors" are the people on whom an AI program is based, while "data recipients" are defined as the companies or entities that may possess the digital information. "Service interactants," meanwhile, are the relatives, friends, and anyone else who may use a "deadbot" or "ghostbot."

In one piece of design fiction, an adult user is impressed by the realism of their deceased grandparent's chatbot, only to soon receive "premium trial" and food delivery service advertisements in their relative's voice. In another, a terminally ill mother creates a deadbot for her eight-year-old son to help him grieve. But in adapting to the child's responses, the AI begins to suggest in-person meetings, causing psychological harm.

In a final scenario, an elderly customer enrolls in a 20-year AI program subscription in the hope of comforting their family. Because of the company's terms of service, however, their children and grandchildren can't suspend the service even if they don't want to use it.

"Rapid advancements in generative AI mean that nearly anyone with internet access and some basic know-how can revive a deceased loved one," said Nowaczyk-Basińska. "At the same time, a person may leave an AI simulation as a farewell gift for loved ones who are not prepared to process their grief in this manner. The rights of both data donors and those who interact with AI afterlife services should be equally safeguarded."


"These services run the risk of causing huge distress to people if they are subjected to unwanted digital hauntings from alarmingly accurate AI recreations of those they have lost," Hollanek added. "The potential psychological effect, particularly at an already difficult time, could be devastating."

The ethicists believe certain safeguards can and should be implemented as soon as possible to prevent such outcomes. Companies need to develop sensitive procedures for "retiring" an avatar, and to maintain transparency about how their services work through risk disclaimers. Meanwhile, "re-creation services" should be restricted to adult users only, while also respecting the mutual consent of both data donors and their data recipients.

"We need to start thinking now about how we mitigate the social and psychological risks of digital immortality," Nowaczyk-Basińska argues.